Re-Envisioning NAEYC Early Learning Quality Assessment and Accreditation: Advancing Equity and Accessibility

High-quality early learning experiences contribute to positive long-term outcomes for children. These include increased educational attainment, healthier lifestyles, and more successful careers (Hahn et al. 2016; Hong et al. 2019; Bustamante et al. 2022; CDC OPPE 2023). The goal of NAEYC Early Learning Program Quality Assessment and Accreditation is to help educators and other early learning professionals develop a shared understanding of and commitment to quality. It supports essential elements of a high-quality early learning experience: increased staff morale, greater staff retention, and a more positive, energetic professional atmosphere (Bloom 1996; Whitebook, Sakai, & Howes 2004; Grant, Jeon, & Buettner 2019). It also can help families recognize quality early learning programs, so they can feel comfortable knowing that their children are receiving a high-quality, research-based education that will prepare them for future success.

For more than 40 years, NAEYC has accredited early childhood education programs through a multistep system that includes site visits and scrutiny of class and program portfolios. Now, the association is re-envisioning its early learning quality assessment and accreditation process, based on a commitment to continuous quality improvement, lessons learned from the COVID-19 pandemic, and feedback from the field. This article traces the history of NAEYC Early Learning Program Accreditation, the impetus for change, and how we are using evidence to inform our approach to the new model, which will launch in March 2025 (see “Re-envisioning the NAEYC Quality Assessment and Accreditation System: A Brief Timeline of Recent Efforts” at the end of this article).

A Brief History of NAEYC Early Learning Program Quality Assessment and Accreditation

Forty years ago, NAEYC set out to create an early learning accreditation system to offer programs access to continuous quality improvement. This included the latest research on effective practices, training, technical assistance, and visibility on family-focused search engines. What began as an idea for a child care “center endorsement project” in 1980 has evolved over time. The original goal of accreditation was to build consensus in defining quality in a fragmented and largely unregulated field. Highlights of how NAEYC Early Learning Program Accreditation has adapted to changing circumstances within the broader field include

- reinventing the early learning accreditation system in 2006 in response to overwhelming demand and to set the groundwork for continuous cycles of quality improvement.

- streamlining early learning accreditation content in 2018 to address issues of redundancy. This resulted in a 63 percent decrease in discrete assessment criteria.

Currently, early learning programs seeking accreditation by NAEYC participate in a four-step process. After periods of self-study and self-assessment (steps 1 and 2), programs submit candidacy documentation (step 3) that, if approved, leads to an on-site observational visit (step 4). During the site visit, trained assessors look for program compliance with NAEYC’s Early Childhood Program Standards and Accreditation criteria through observational and written evidence. Evidence gathered during the site visit informs the accreditation decision of “accredit” or “defer.” Programs receiving defer decisions are not awarded NAEYC Early Learning Program Accreditation; this decision implies that the program needs to complete additional quality improvement work before it can meet accreditation standards.

NAEYC is committed to continuous quality improvement. Early learning programs participating in the NAEYC Early Learning Program Accreditation process are expected to routinely examine what they do and how they do it, and to make changes over time toward higher quality practices. Likewise, NAEYC as an organization prioritizes ongoing self-reflection, revising and refining its Early Learning Quality Assessment and Accreditation system to ensure that it supports positive outcomes for children, families, and early learning professionals. Through study and reflection, the association learned that the systems put in place in 2018 were not ideally equipped to support continuous quality improvement efforts. Information gaps and less than optimal internal support resulted in some erosion of trust in the system. As a result, NAEYC embarked on a comprehensive overhaul of processes, content, and technologies. However, in March 2020, the COVID-19 pandemic necessitated halting all in-person accreditation site visits and NAEYC’s budding plans to address issues of transparency.

Innovating During the Pandemic: Provisional Accreditation

As the world faced an unprecedented pandemic, the NAEYC Early Learning Program Accreditation team moved quickly, out of necessity, to change its processes and decision making. This was a time when the early learning field grappled with previously unthinkable problems and pivoted to redefine what high-quality early learning settings and experiences looked and felt like. NAEYC developed a revised assessment process that would allow for the verification of high-quality early learning practices despite government-mandated travel restrictions and early learning program closures. Likewise, the team acted nimbly and decisively to continue to offer reassurance that programs bearing the NAEYC Early Learning Program Accreditation seal could still be relied on as the distinguishing mark of high-quality early learning.

Several options were explored in lieu of in-person observations, including the use of video recordings, remote observation via live streaming, and remote-controlled robotic devices. Following pilot testing of remote observation via live streaming in two volunteer programs, NAEYC determined that it was not possible to conduct the class and program observations with fidelity in this manner. The cost and logistics of quickly acquiring and simultaneously deploying the necessary equipment across the country were the primary barriers to pursuing remote observations. As a result, NAEYC concluded that the best option under these extraordinary circumstances was to decouple the program and classroom portfolio assessment (steps 1 through 3) from the observational assessment (step 4). While the former could be conducted remotely with fidelity in a digital format, the observational assessment still needed to be conducted on-site by a trained and reliable assessor.

This revised assessment and accreditation process allowed NAEYC to grant programs provisional accreditation status based on the review of program and classroom portfolios only. This was considered a first step, known as provisional part 1 (PV1). It came with the requirement that an on-site assessment of observational practices be completed when programs fully reopened and travel restrictions were lifted. This was considered the second step, or provisional part 2 (PV2). Programs that received provisional accreditation were asked to inform families and staff of the provisional nature of the accreditation decision.

Revisiting Re-Envisioning

In the wake of the pandemic, NAEYC has resumed a focus on re-envisioning Early Learning Program Quality Assessment and Accreditation to ensure continuous quality improvement. This entails addressing known issues with capacity, agility, accessibility, equity, and transparency. Toward this end, in 2022 NAEYC embarked on a re-envisioning of its quality assessment and accreditation process to address anecdotal and survey evidence indicating that the system is too complex and difficult to navigate. Early learning programs reported to NAEYC that while the quality content is strong, the process is inaccessible and burdensome to many. Since then, NAEYC’s Applied Research and Early Learning Program teams have analyzed existing data and gathered input from diverse parties to evaluate the quality assessment content and processes through a mixed-method, intentional, and inclusive process. The goal of these efforts is to revise the NAEYC Early Learning Program Quality Assessment and Accreditation system so that it is

- streamlined, collecting information from programs in an efficient and meaningful way that reduces redundancy and improves the measurement of quality

- equitable, ensuring that quality assessment is inclusive so that more early learning programs have access to accreditation

- innovative, linking quality assessment standards and items to the latest research

- aligned, working to reduce the administrative burden for early learning programs and finding ways to connect NAEYC and other systems, such as state licensure, Quality Rating and Improvement Systems, and other national quality assessment systems

- accessible, introducing multiple ways to engage and allowing for programs at all stages of quality improvement to showcase their strengths through a tiered system of quality assessment and accreditation (see “NAEYC’s New Tiered Early Learning Quality Assessment and Accreditation System”)

NAEYC’s New Tiered Early Learning Quality Assessment and Accreditation System

NAEYC’s new model of assessment and accreditation for early learning programs will have three tiers:

- The Recognition tier will serve as an on-ramp for programs interested in program quality. Content related to this tier will focus on identifying the key policy components and baseline practices upon which high-quality early learning experiences and environments can be built. There will be fewer barriers to entry and clearly defined pathways for programs and providers that are interested in becoming accredited. The assessment process for Recognition will be entirely based on document review.

- Accreditation is the second tier. Content will be streamlined to include fewer, but still meaningful and rigorous, assessment items. Additionally, programs and providers will have new opportunities to uplift and highlight their unique characteristics. Like Recognition, a review of documentary evidence will be the basis upon which an accreditation decision is initially granted. However, accredited programs will remain accountable for maintaining high-quality practices over time through ongoing quality assurance measures. These will include unannounced site visits.

- Accreditation+ is the third tier of the new assessment and accreditation model. Its content is the same as the Accreditation tier, but there are higher requirements for ongoing engagement with quality assurance.

What Can We Learn from the Provisional Accreditation Process?

Although born out of necessity in a time of crisis, the provisional accreditation process created something akin to a “natural experiment” that provided an opportunity to learn more about the overall system and its individual components. It also offered useful insights to inform the goals of the revision process.

Given the known existing challenges with NAEYC Early Learning Program Quality Assessment and Accreditation, the goals for the revision process, and NAEYC’s commitment to continuous learning and improvement, the Applied Research team undertook an evaluation of the provisional process. Their goal was to gather insights to inform the re-envisioned system and content. In particular, the team sought to disentangle what could be gleaned from the documentation of quality separate from a direct, in-person observation of quality. To optimize resources to support access to quality assessment, equity in quality assessment, and the system’s reach, the team sought to understand whether quality practice could be reliably and consistently assessed through documentation. Toward that end, the following research questions were explored:

- Could the assessment of documentary evidence (PV1) be decoupled from the on-site visit of observational evidence (PV2) without compromising the overall measurement of quality practices?

- What could be learned about programs that received provisional accreditation based on documentary evidence (PV1) but were unable to convert that provisional status into a full, five-year accreditation term due to failures during the observational site visit (PV2)?

What Insights Came from This Exploration?

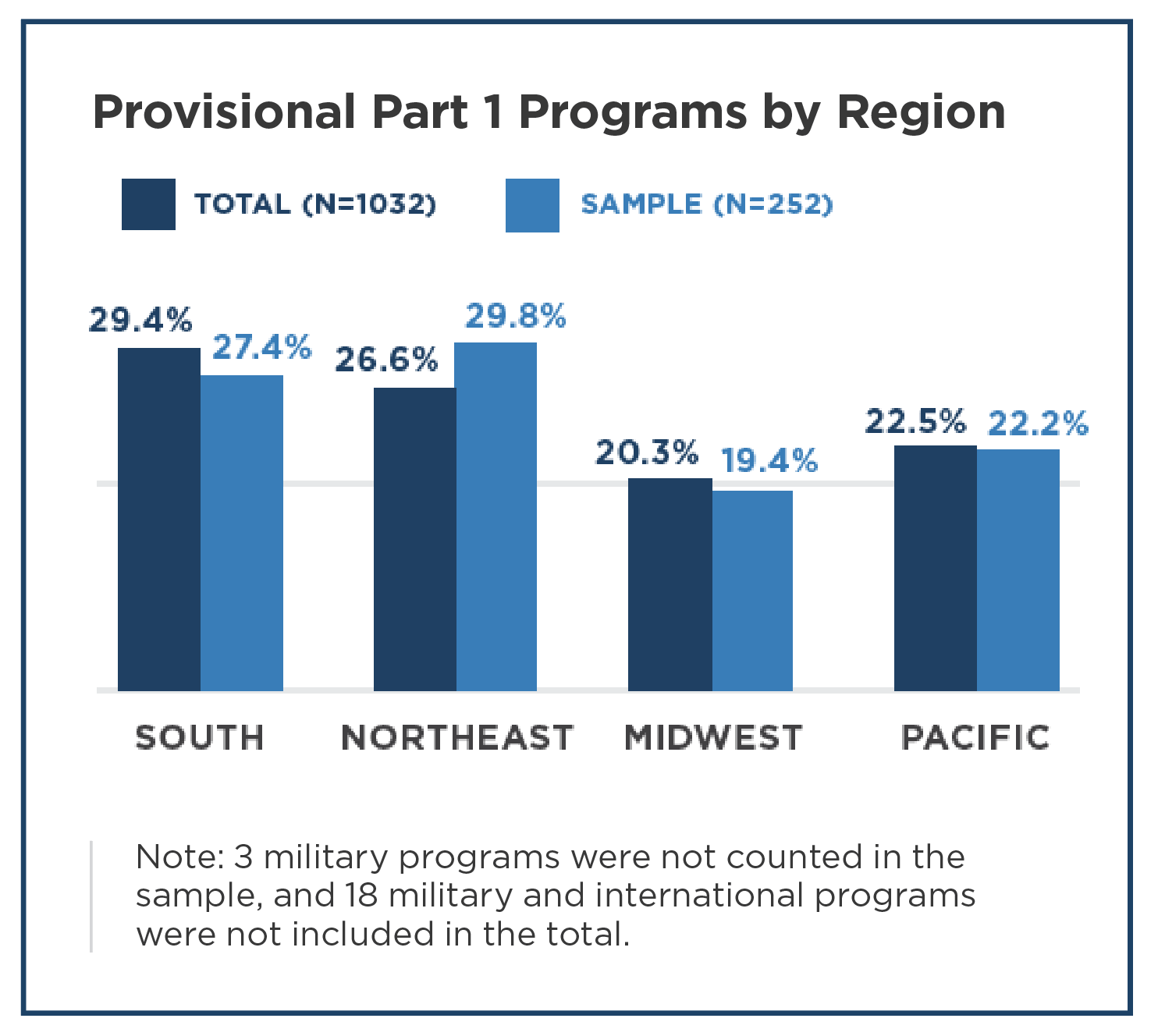

During the provisional process, 1,032 unique accreditation decisions were made based only on program and classroom portfolio review (PV1). For this study, an initial stratified random sample of 282 programs that completed PV1 was selected. A final sample of 252 accreditation decisions where programs completed both the portfolio review (PV1) and the observation component (PV2) was selected for purposes of analysis to represent the larger population. (For more information on the sampling methodology, please email [email protected].) The sample size balanced pragmatism and efficiency with sufficient power to detect a statistically significant relationship, assuming a confidence level of 95 percent. The random sample was selected to ensure that the number of programs that achieved accreditation and the number deferred aligned with the overall accreditation rate of the population of interest.

The sample included programs across 40 states, Washington, DC, and two foreign territories (see “Provisional Part 1 Programs by Region”). Geographically, the sample was representative of the population in alignment with the US Census Regions and Divisions. Attempts were also made to ensure that the sample reflected the larger population in terms of systemwide program portfolio use, which allows organizations that have a large number of related programs in the early learning accreditation system to streamline their program portfolios through a process of preapproved, organization-wide evidence.
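To make the sampling idea concrete, the following is a minimal sketch of proportional stratified random sampling, in which each stratum's share of the sample mirrors its share of the population. It is an illustration only, not NAEYC's actual procedure. The function name, the record layout, and the 900/132 split between "accredit" and "defer" decisions are hypothetical; only the population size (1,032) and the initial sample size (282) come from this article.

```python
import random
from collections import defaultdict


def proportional_stratified_sample(records, stratum_of, sample_size, seed=0):
    """Draw a random sample whose strata mirror the population's proportions."""
    rng = random.Random(seed)

    # Group the population by stratum (here, the PV1 decision).
    strata = defaultdict(list)
    for record in records:
        strata[stratum_of(record)].append(record)

    # Largest-remainder allocation so per-stratum counts sum to sample_size.
    total = len(records)
    exact = {name: sample_size * len(members) / total
             for name, members in strata.items()}
    counts = {name: int(share) for name, share in exact.items()}
    leftover = sample_size - sum(counts.values())
    by_remainder = sorted(exact, key=lambda name: exact[name] - counts[name],
                          reverse=True)
    for name in by_remainder[:leftover]:
        counts[name] += 1

    # Sample without replacement inside each stratum.
    sample = []
    for name, members in strata.items():
        sample.extend(rng.sample(members, counts[name]))
    return sample, counts


# Hypothetical population of 1,032 PV1 decisions; the 900/132 split is invented.
population = [
    {"id": i, "decision": "accredit" if i < 900 else "defer"}
    for i in range(1032)
]
sample, counts = proportional_stratified_sample(
    population, lambda record: record["decision"], sample_size=282
)
print(counts)
```

Allocating by largest remainder is one simple way to keep the sample's accredit-to-defer ratio as close as possible to the population's while still reaching the exact target size.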

To address the study’s two research questions, the Applied Research team relied upon a mixed-methods approach, combining both correlational statistical analyses and a deep qualitative review of the PV2 observation assessment materials for verification. First, they compared overall scores and pass rates from PV1 and PV2 to understand the strength and direction of the relationship between these two scores. Next, they closely examined the qualities of programs that passed PV1 but failed PV2. Assessors’ notes from observational site visits were used at this step and served a twofold purpose: to understand factors that led to failures in PV2 and to identify ways to enhance a system that may not require on-site observational assessment to issue accreditation decisions.

These analyses revealed a strong, positive, statistically significant correlation between PV1 and PV2 overall scores. The vast majority of programs that passed PV1 based on documentary evidence went on to successfully convert their provisional accreditation into a full five-year term following an on-site observational visit (PV2). Three programs failed PV2 despite passing PV1. This was because they failed to meet a particular standard or item(s), or they failed to attain the minimum overall score necessary for passage. Six programs did not participate in PV2 because they had closed, voluntarily withdrawn, or had their provisionally granted accreditation revoked due to compliance issues with required assessment items.
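For readers less familiar with correlational analysis, the sketch below shows one common way to measure the strength and direction of the relationship between two sets of scores. The article does not state which coefficient was used, so Pearson's r is an assumption here, and the PV1 and PV2 scores are invented purely for illustration.

```python
import math


def pearson_r(xs, ys):
    """Return Pearson's correlation coefficient for two equal-length lists."""
    if len(xs) != len(ys) or len(xs) < 2:
        raise ValueError("need two equal-length lists with at least 2 values")
    n = len(xs)
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    # Sums of cross-products and squared deviations from the means.
    cov = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    var_x = sum((x - mean_x) ** 2 for x in xs)
    var_y = sum((y - mean_y) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined when one list is constant")
    return cov / math.sqrt(var_x * var_y)


# Hypothetical overall scores for six programs (not NAEYC data).
pv1_scores = [82.0, 88.5, 91.0, 79.5, 95.0, 86.0]
pv2_scores = [80.0, 90.0, 92.5, 78.0, 96.0, 85.0]

r = pearson_r(pv1_scores, pv2_scores)
print(f"Pearson r between PV1 and PV2 scores: {r:.3f}")
```

A value near +1 indicates that programs scoring higher on the portfolio review also tend to score higher on the observation. Judging statistical significance requires an additional test that this sketch leaves out.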

What Are the Implications of These Findings for Work Moving Forward?

As NAEYC’s Early Learning Quality Assessment and Accreditation system undergoes a historic revisionary improvement, this analysis of provisional accreditation decisions supports the following changes:

- Decoupling the portfolio assessment from the observation assessment and re-envisioning how documentary evidence is collected, organized, and evaluated. Nearly all provisionally accredited programs successfully converted their provisionally granted accreditation status into a full, five-year term following the on-site observational assessment. It is therefore feasible to pursue further exploration of issuing an accreditation decision based on portfolio evidence alone and relying on verification of practices on a random, unannounced basis.

- Continuous quality assurance practices throughout the entire accreditation term, which would include random, unannounced visits. Three programs did not pass their on-site observational assessments after receiving provisional accreditation. Thus, as NAEYC explores and implements a new tiered quality assessment and accreditation system, it will be critical to evaluate the effectiveness of existing quality assurance and fidelity processes and procedures and consider novel ways to ensure that high-quality early learning practices are taking place on an ongoing basis.

This analysis is one of many efforts NAEYC has initiated over the past year to determine how best to revise its Early Learning Program Quality Assessment and Accreditation system, but it is not without limitations. Programs deferred in PV1 did not receive a site visit during PV2. While this is NAEYC’s standard protocol, it is unknown how these programs might have performed observationally since no site visits were scheduled. In addition, because site visits are only conducted for programs that receive passing scores from portfolio reviews, assessors are not blind to a program’s provisional status. This could potentially introduce bias into their observational assessments.

What Is Next?

For 40 years, NAEYC Early Learning Program Accreditation was rooted in the idea that on-site observation is essential to accurately measure quality. The COVID-19 pandemic challenged us to think differently about this belief. Without that experience, we likely would never have considered a different possibility. The provisional accreditation process provided NAEYC with the data we needed to be innovative and to consider an accreditation process that provides the flexibility that programs desire without compromising the quality that NAEYC Early Learning Program Accreditation represents.

The data presented in this article have given NAEYC the ability to consider new possibilities for assessing quality in early learning programs that align with the goals of equity, accessibility, and simplicity—the factors that are driving our revisions. By rethinking the role of the on-site visit, NAEYC will be able to thoughtfully consider a fee structure that better meets the needs of programs. The updated model will also provide additional opportunities for states and other systems to engage more explicitly with NAEYC Early Learning Program Quality Assessment and Accreditation. This will provide a more aligned experience for early learning programs.

Early learning programs that are interested in learning more about the changes to quality assessment and accreditation can access the information via NAEYC’s website at NAEYC.org/accreditation/early-learning-program-accreditation. As we move closer to the March 2025 launch date, additional resources will be available to support currently accredited programs as well as those new to quality assessment and accreditation. We hope that these changes will result in an increased interest in quality assessment and accreditation from programs across the country, which will in turn lead to greater levels of access to high-quality early learning for young children and their families.

Amanda Batts, Meghan Salas Atwell, Alissa Mwenelupembe, Elizabeth Anthony, Ashraf Alnajjar, Kim Hodge, and Jesse Ritenour contributed to this article.

Photograph: © Getty Images

Copyright © 2024 by the National Association for the Education of Young Children. See Permissions and Reprints online at NAEYC.org/resources/permissions.

References

Bloom, P.J. 1996. “The Quality of Work Life in Early Childhood Programs: Does Accreditation Make a Difference?” In NAEYC Accreditation: A Decade of Learning and the Years Ahead, eds. S. Bredekamp & B.A. Willer, 13–24. Washington, DC: NAEYC.

Bustamante, A.S., E. Dearing, H.D. Zachrisson, & L. Vandell. 2022. “Adult Outcomes of Sustained High-Quality Early Child Care and Education: Do They Vary by Family Income?” Child Development 93 (2): 502–23.

CDC OPPE (Centers for Disease Control and Prevention Office of Policy, Performance, and Evaluation). 2023. “Early Childhood Education: Health Impact in 5 Years.” archive.cdc.gov/www_cdc_gov/policy/hi5/earlychildhoodeducation/index.html.

Grant, A.A., L. Jeon, & C.K. Buettner. 2019. “Relating Early Childhood Teachers’ Working Conditions and Well-Being to Their Turnover Intentions.” Educational Psychology 39 (3): 294–312.

Hahn, R.A., W.S. Barnett, J.A. Knopf, B.I. Truman, R.L. Johnson, et al. 2016. “Early Childhood Education to Promote Health Equity: A Community Guide Systematic Review.” Journal of Public Health Management and Practice 22 (5): E1–8.

Hong, S.L.S., T.J. Sabol, M.R. Burchinal, L. Tarullo, M. Zaslow, et al. 2019. “ECE Quality Indicators and Child Outcomes: Analyses of Six Large Child Care Studies.” Early Childhood Research Quarterly 49 (4): 202–17.

Whitebook, M., L.M. Sakai, & C. Howes. 2004. “Improving and Sustaining Center Quality: The Role of NAEYC Accreditation and Staff Stability.” Early Education and Development 15 (3): 305–26.